Shift Paradigm acquires design and technology consultancy Principle Studios.

See the reflection of your business through the lens of data and technology.

Our Expertise

Growth Consulting

Architecting actionable plans and strategic playbooks designed to solve business problems and deliver results.

Technology Transformation

Engineering modern MarTech enterprise ecosystems that seamlessly connect technology and data.

Personalized Experiences

Crafting omnichannel journeys and campaigns built to nurture and deepen lasting customer relationships.

Alchemy of

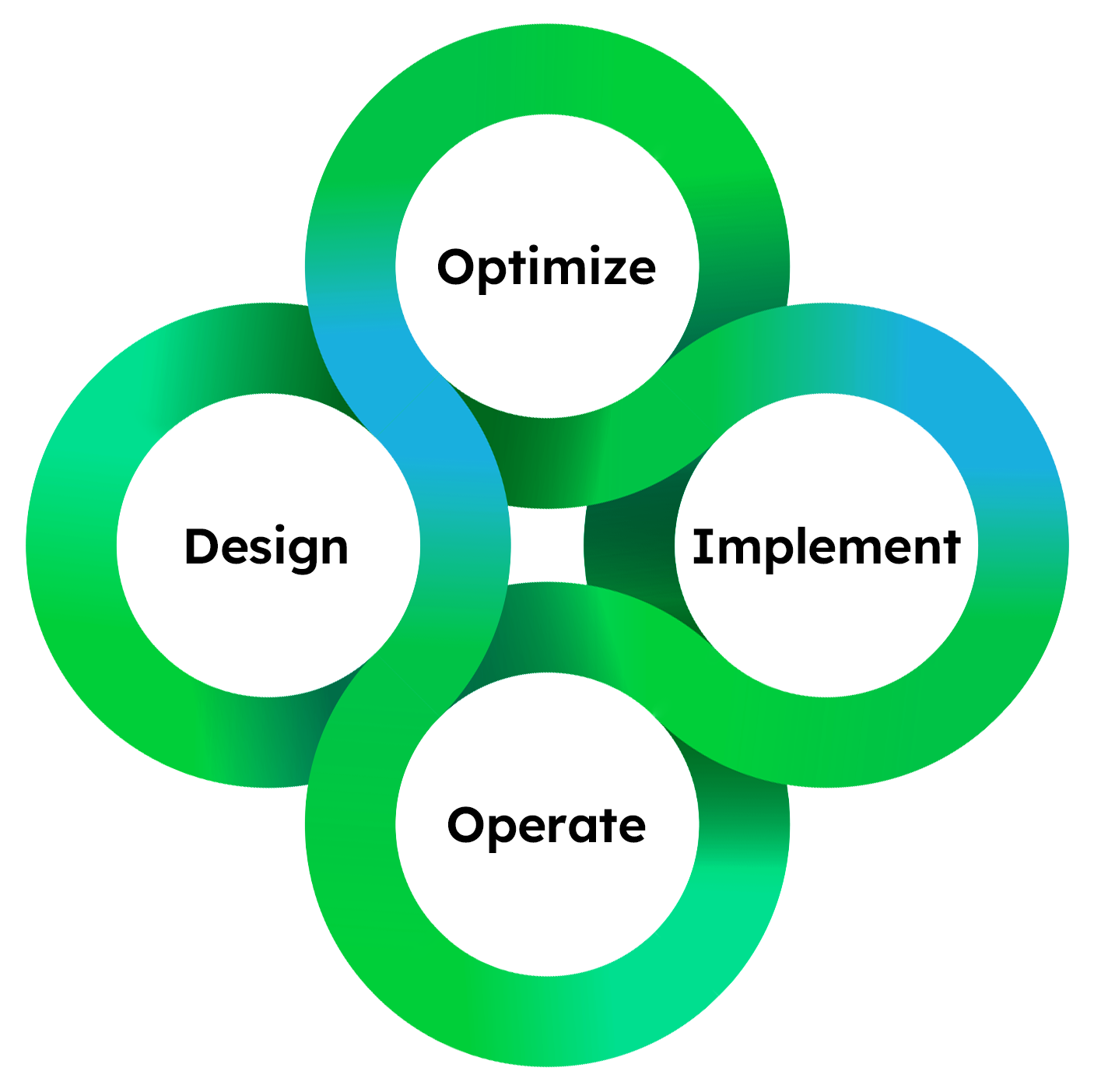

Our Approach

Our team of strategists, technologists and creatives crafts tailored solutions to optimize your marketing and sales operations, driving measurable growth.

We deliver impactful results across revenue operations, full-lifecycle campaigns and go-to-market strategies aligned around growth opportunities.

Shift Tech Navigator

66% of the average MarTech stack is underutilized. Partner with us to amplify your business growth and efficiency with the right tech and data stack in place.

PROVEN SUCCESS

How Repairing the Leaky Funnel for a Multinational MedTech Manufacturer Drove $12M in Immediate Revenue

See how we create a cohesive, streamlined lead generation approach that makes optimal use of resources while capitalizing on potential business growth.

Igniting Growth for Blue Chip Brands

Ready to make a shift?

Find out how we can support your growth.

INSIGHTS

Our Partners